How confident are you in your ability to detect artificial intelligence within the tools you use daily?

According to a Pew Research Center study, Americans are aware of the common ways they might encounter AI in their day-to-day routines, but only three out of ten US adults were able to correctly identify all six uses of AI asked about in the survey. AI in the classroom has been a hot topic this year, so we looked at whether the same held true for teachers.

Building up our awareness of how AI is shaping the world around us (and has been for years) is the first step towards being able to have more informed, constructive conversations around how AI fits best in the classroom. To help you better understand where AI is already being used in your daily routine, here are five ways you’re already leveraging AI in the classroom but may not even realize it:

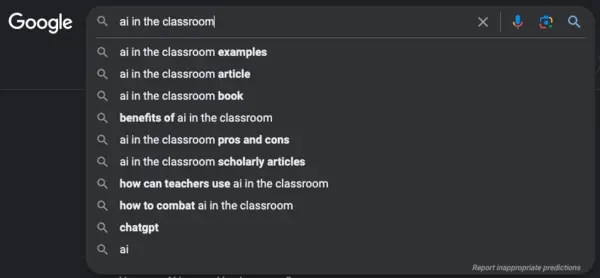

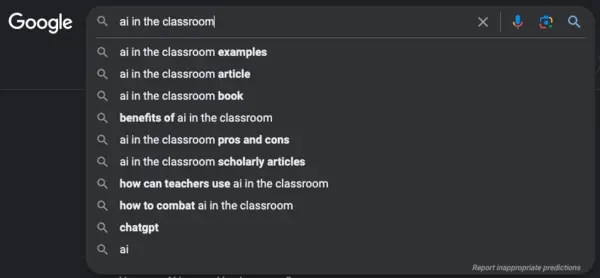

1. Searching online

For most of us, Googling something has become as instinctive as breathing, whether you’re at home or at school. And although typing a question into Google Search doesn’t work quite the same way as asking ChatGPT, Google does use AI to improve the accuracy and relevance of its search results, and it has been doing so since 2015.

Google uses machine learning algorithms to understand and interpret the queries typed in by users in native languages from all over the world (Google Translate is entirely powered by AI). Machine learning also improves the accuracy of Google Search’s autocomplete suggestions, which is why those suggestions, along with the results you see, can sometimes feel eerily personalized. AI is enabling Google to make an educated guess about what you’re likely interested in, based on factors like your search history, your location, and past interactions with other Google products or services.

2. Spell-check and auto-correct

No matter the platform you’re typing in–Google Docs, Microsoft Word, or even iMessage–you have AI to thank for those auto-correct suggestions.

To build out their spell check and grammar suggestion capabilities, Google consulted with experts in computational and analytical linguistics who reviewed thousands of grammar samples and identified common patterns and corrections. Those patterns were then fed into statistical learning algorithms along with examples of “correct” texts gathered from trusted sources, which led to the development of their spelling and grammar correction models. These models are still in use today, and they’re constantly learning from user behavior to make improvements on the fly.
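To make the idea of learning corrections from examples of "correct" text more concrete, here is a minimal sketch in Python. It is not Google's model: the tiny corpus and the function names (`train`, `one_edit_away`, `suggest`) are invented for illustration, and the approach is simply to pick the most frequent known word within one typo of what was typed.

```python
from collections import Counter
import string

# Hypothetical stand-in for "correct" text gathered from trusted sources.
CORPUS = "the teacher asked the students to write their names on the paper"

def train(text):
    """Count how often each word appears in the example text."""
    return Counter(text.lower().split())

def one_edit_away(word):
    """All strings reachable by one delete, swap, replace, or insert."""
    letters = string.ascii_lowercase
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [a + b[1:] for a, b in splits if b]
    swaps = [a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1]
    replaces = [a + c + b[1:] for a, b in splits if b for c in letters]
    inserts = [a + c + b for a, b in splits for c in letters]
    return set(deletes + swaps + replaces + inserts)

def suggest(word, counts):
    """Return the word itself if known, else the most common near match."""
    word = word.lower()
    if word in counts:
        return word
    candidates = [w for w in one_edit_away(word) if w in counts]
    if not candidates:
        return word  # nothing better to offer
    return max(candidates, key=lambda w: counts[w])

counts = train(CORPUS)
print(suggest("teh", counts))       # the
print(suggest("studnets", counts))  # students
```

Real spelling and grammar models are far larger and also weigh the surrounding context, but the core loop is similar: learn what correct text looks like, then propose the likeliest fix.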

Auto-correct was actually one of the first iPhone features to utilize machine learning. Your iPhone can predict what you’re trying to type, giving you recommended words, emojis, or information that can be inserted with one tap. In 2023, Apple announced that their keyboard will now leverage a new “transformer language model” that significantly improves word prediction as you type. It also learns your most frequently typed words, including swear words, so your days of accidentally saying “what the duck” might finally be coming to an end.

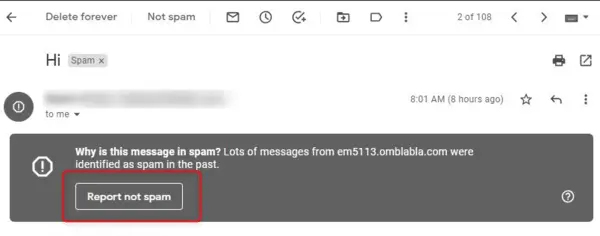

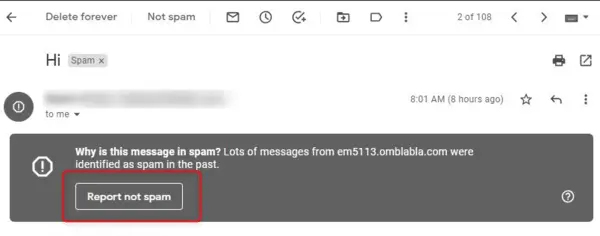

3. Email filtering

Even as other forms of communication come and go (RIP Twitter), email seems to be here to stay. You likely use it every day both at work and in your personal life, but you may not realize that seemingly mundane features like spam filtering are actually made possible through AI.

Google is very proud of Gmail’s spam filtering functionalities, which they say block “nearly 10 million spam emails every minute” and “prevent more than 99.9% of spam, phishing attempts and malware from reaching you.” AI algorithms play a crucial role in this process by accurately identifying and blocking spam emails, using models trained on known examples from spammers. The AI analyzes a multitude of factors, like the sender’s identity, the content within the email, and the recipient’s past email behavior, in order to decide if an email is likely to be spam. And if it marks an email as spam incorrectly, make sure to click “Not spam,” because the algorithm is continuously learning from your feedback and will use that information to refine its approach for next time.
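For a feel of how a filter can learn from labeled examples and from "Not spam" feedback, here is a toy sketch in Python. It assumes a simple word-counting (naive Bayes) approach with made-up messages; the class name `TinySpamFilter` is hypothetical, and Gmail's real system considers many more signals than the words in a message.

```python
import math
from collections import Counter

class TinySpamFilter:
    """Toy word-count (naive Bayes) filter; not Gmail's actual system."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.message_counts = {"spam": 0, "ham": 0}

    def learn(self, text, label):
        """Update counts from one labeled message ('spam' or 'ham')."""
        self.word_counts[label].update(text.lower().split())
        self.message_counts[label] += 1

    def _score(self, words, label):
        """Log-probability of the message under one label, with add-one smoothing."""
        counts = self.word_counts[label]
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_words = sum(counts.values())
        total_messages = sum(self.message_counts.values())
        prior = (self.message_counts[label] + 1) / (total_messages + 2)
        score = math.log(prior)
        for w in words:
            score += math.log((counts[w] + 1) / (total_words + len(vocab) + 1))
        return score

    def is_spam(self, text):
        words = text.lower().split()
        return self._score(words, "spam") > self._score(words, "ham")

f = TinySpamFilter()
f.learn("win a free prize now", "spam")
f.learn("claim your free gift card", "spam")
f.learn("staff meeting moved to friday", "ham")
f.learn("field trip permission slips due friday", "ham")

msg = "free pizza for staff"
before = f.is_spam(msg)   # True: "free" looks spammy to the toy model
f.learn(msg, "ham")       # the equivalent of clicking "Not spam"
after = f.is_spam(msg)    # False: the model has adjusted
print(before, after)
```

The second check comes out differently because the correction is folded straight back into the word counts, which is the same feedback loop described above, just at a much smaller scale.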

4. Text-to-speech and transcription tools

Text-to-speech (TTS) tools are widely used in schools to accommodate students who benefit from audio support during classroom time. These tools rely heavily on the advancements of AI, particularly in recent years. ElevenLabs, a company that specializes in “natural-sounding speech synthesis and text-to-speech software,” explained how their product uses machine learning: it first analyzes the written content that needs to be spoken aloud, comparing it to the information in its models to gain an understanding of the structure, context, and phrases used so it can then interpret how it should be spoken. That analyzed text is then converted into spoken language using a large database of small speech segments (phonemes). Lastly, their software links those phonemes together to construct complete words and sentences.

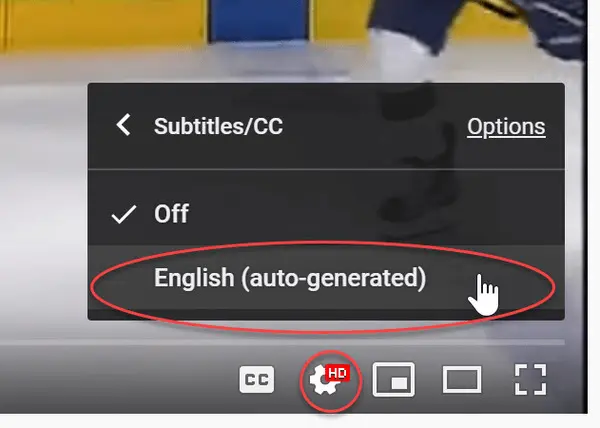

If you’ve ever used the auto-generated captions on a YouTube video, or recorded a meeting transcription at work, much of the same AI technology is being employed, just in reverse. Advanced natural language processing (NLP) algorithms and machine learning models are used to analyze audio inputs and compare them to previous examples in order to generate accurate transcriptions of what’s being said.

5. Music and video recommendations

When you get to the end of a YouTube video, you’re rewarded with a plethora of recommendations that feel tailor-made to you and what you want to watch. This is no accident–yep, it’s AI at work once again.

This essay from a Harvard alumnus breaks it down well: YouTube recommendations employ a two-sided AI algorithm. The first side covers candidate generation, which it does by understanding a user’s history and comparing it with other users’ stats like how many videos were watched, or what types of videos were watched. The second side of their algorithm is a ranking network, which sifts through millions of videos while also scaling down to individual users to provide them with the content they’re most likely to find interesting. These rankings are constantly changing as YouTube measures the time users spend watching certain videos and which videos are receiving clicks. If a video is proving popular with users like you, it will move up in the rankings and likely be served right to your device.
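The two stages described above can be sketched in a few lines of Python. This is only an illustration under invented assumptions: the watch histories, engagement numbers, and the scoring rule (minutes watched times clicks) are made up, and YouTube's real system uses neural networks rather than simple set overlaps.

```python
# Hypothetical watch histories: user -> set of video ids.
HISTORY = {
    "you":   {"fractions", "volcanoes"},
    "ana":   {"fractions", "volcanoes", "decimals"},
    "ben":   {"volcanoes", "earthquakes", "decimals"},
    "chris": {"guitar", "cooking"},
}

# Hypothetical engagement stats per video: (average minutes watched, clicks).
STATS = {
    "decimals": (6.0, 120),
    "earthquakes": (8.5, 300),
    "guitar": (9.0, 500),
    "cooking": (4.0, 80),
}

def generate_candidates(user, history):
    """Stage 1: collect unseen videos watched by users with overlapping history."""
    seen = history[user]
    candidates = set()
    for other, videos in history.items():
        if other != user and seen & videos:
            candidates |= videos - seen
    return candidates

def rank(candidates, stats):
    """Stage 2: order candidates by a simple engagement score."""
    def score(video):
        minutes, clicks = stats[video]
        return minutes * clicks
    return sorted(candidates, key=score, reverse=True)

recommendations = rank(generate_candidates("you", HISTORY), STATS)
print(recommendations)  # ['earthquakes', 'decimals']
```

Notice that "guitar" never shows up even though it has the strongest engagement numbers: nobody with a history similar to yours watched it, so it never made it past the first stage.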

If you’re not watching videos but instead listening to music via Spotify or Pandora, you’re also using AI without knowing it. They’ve both been using AI to recommend songs to their users for years, as Pandora’s vice president of data science at the time told Forbes in 2019. Pandora’s algorithms examine music for up to 450 unique attributes “ranging from simple categories like genre to nuances like the complexity of melodies and the nasality of a singer’s voice” and use that evaluation to determine if you’ll likely enjoy listening to it.
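One simple way to picture attribute-based recommendation is to describe each song as a list of numbers and look for songs whose numbers point in a similar direction. The Python sketch below assumes three invented attributes and made-up scores, and uses cosine similarity as a stand-in technique; it is not Pandora's actual algorithm.

```python
import math

# Hypothetical attribute scores (0 to 1), in the order:
# [tempo, melodic complexity, vocal nasality]
SONGS = {
    "liked_song": [0.8, 0.3, 0.6],
    "song_a":     [0.7, 0.4, 0.5],
    "song_b":     [0.1, 0.9, 0.1],
}

def cosine_similarity(a, b):
    """Similarity of two attribute lists: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(target, songs):
    """Name of the song whose attributes best match the target song."""
    others = [name for name in songs if name != target]
    return max(others, key=lambda name: cosine_similarity(songs[target], songs[name]))

print(most_similar("liked_song", SONGS))  # song_a
```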

Spotify even released an AI DJ earlier this year, which speaks directly to the listener in an uncannily human-sounding voice, recommending tracks it thinks you’ll love. Check it out if you haven’t yet–it’s oddly soothing in its imitation of the smooth-talking radio DJs we grew up with.

Level up your AI awareness

Now that you have some examples of how AI in the classroom is already part of your routine, you’ll be better equipped to spot other applications of AI in your daily life. For better or worse, AI is here to stay, so it’s important to keep tabs on where it’s being used in your classroom. Try getting into the habit of asking yourself “is this AI-based?” when you discover a nifty new tool. It’s not a bad thing if it is–in fact, the examples above demonstrate how much positive value AI has the potential to bring us–but you’ll definitely want to evaluate it with the same rigor you apply to any new product before introducing it to your students.
